Depth Up-Scaling

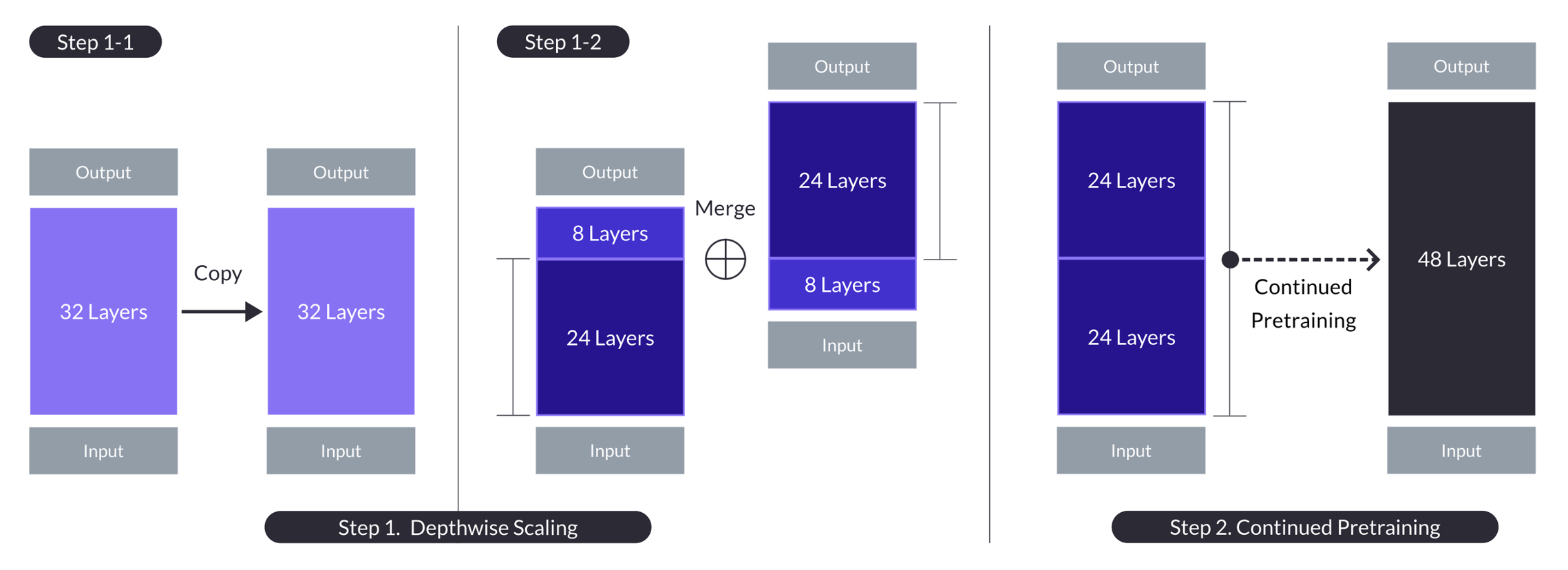

The SOLAR 10.7B paper introduces Depth Up-Scaling (DUS) as an alternative to mixture-of-experts (MoE) for scaling up a model. It is based on a simple idea: take an existing model, stack it with a copy of itself to double its depth, and continue pretraining the result so that it outperforms the original. The problem lies at the seam where the last layer of the original model is concatenated with the first layer of its duplicate: there, the “layer distance” (the difference between the base-model indices of two adjacent layers) is greater than 1. To make the resulting model easier to continue pretraining, the authors remove the final \(m\) layers of the original model and the initial \(m\) layers of the duplicate. With this technique, the concatenated model suffers an initial performance drop but recovers rapidly during the continued pretraining phase.
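
A minimal sketch of this layer surgery, written over a plain Python list so it runs without any deep learning framework. The function names `depth_up_scale` and `seam_distance` are hypothetical, and measuring the “layer distance” at the seam as the absolute gap between base-model layer indices is an assumption about how to quantify it. With real weights, each list element would be a decoder layer, and `copy.deepcopy` would give the duplicate its own parameters.

```python
import copy
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def depth_up_scale(layers: Sequence[T], m: int) -> List[T]:
    """Hypothetical sketch of Depth Up-Scaling on a stack of n layers.

    Keeps the first n - m layers of the original and the last n - m layers
    of an independent copy, giving 2 * (n - m) layers in total.
    """
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < n")
    original = list(layers)
    # The duplicate gets its own (deep-copied) layers so both halves can be trained independently.
    duplicate = copy.deepcopy(original)
    # Drop the final m layers of the original and the initial m layers of the copy, then concatenate.
    return original[: n - m] + duplicate[m:]


def seam_distance(n: int, m: int) -> int:
    """Assumed measure of the gap in base-model layer indices at the seam."""
    # Last kept original layer is n - m - 1 (0-indexed); first kept copy layer is m.
    return abs((n - m - 1) - m)
```

For example, `depth_up_scale(list(range(32)), 8)` yields base layers 0 to 23 followed by copied layers 8 to 31, and the gap at the seam shrinks from `seam_distance(32, 0) == 31` (plain duplication) to `seam_distance(32, 8) == 15`.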

Although the authors don’t mention it, removing these layers makes sense from a mechanistic interpretability point of view (see Anthropic’s Transformer Circuits thread): the first and final layers of a transformer tend to have specialized tasks, so the original’s last layers and the duplicate’s first layers would be out of place in the middle of the merged stack.

The authors use Mistral 7B, with a 32-layer Llama 2 architecture, as their base model. They take \(m = 8\), so the resulting model has \(2 \times (32 - 8) = 48\) layers. They do not try other values of \(m\), so there is likely room to improve the method in the near future.
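
A back-of-the-envelope parameter count shows where the “10.7B” in the name plausibly comes from: only the decoder layers are duplicated, while the token embeddings and output head appear once. This sketch assumes the published Mistral 7B configuration (hidden size 4096, MLP size 14336, 8 key-value heads of dimension 128, a 32,000-token vocabulary, no linear-layer biases, untied input and output embeddings); `decoder_layer_params` and `total_params` are hypothetical helpers.

```python
def decoder_layer_params(hidden: int, ffn: int, n_kv_heads: int, head_dim: int) -> int:
    """Parameters in one Llama/Mistral-style decoder layer (assumed: no biases)."""
    kv_dim = n_kv_heads * head_dim
    attention = 2 * hidden * hidden + 2 * hidden * kv_dim  # q and o, plus grouped-query k and v
    mlp = 3 * hidden * ffn  # gate, up and down projections
    norms = 2 * hidden  # two RMSNorm weight vectors
    return attention + mlp + norms


def total_params(n_layers: int, hidden: int = 4096, ffn: int = 14336,
                 n_kv_heads: int = 8, head_dim: int = 128, vocab: int = 32000) -> int:
    """Whole-model count; defaults are assumed Mistral 7B config values."""
    per_layer = decoder_layer_params(hidden, ffn, n_kv_heads, head_dim)
    embeddings = vocab * hidden  # input token embeddings (kept once)
    lm_head = vocab * hidden  # untied output projection (kept once)
    final_norm = hidden
    return n_layers * per_layer + embeddings + lm_head + final_norm


n, m = 32, 8
dus_layers = 2 * (n - m)
print(dus_layers)  # 48
print(f"{total_params(n) / 1e9:.2f}B")  # 7.24B
print(f"{total_params(dus_layers) / 1e9:.2f}B")  # 10.73B
```

Under these assumptions, going from 32 to 48 layers adds 16 layers of about 218M parameters each, which lands close to the reported 10.7B.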

Nous Research has already trained a Hermes 2 model on top of SOLAR 10.7B, and it seems to work really well.